Adaptive Machine Translation with Large Language Models

The Global NLP Lab is a newsletter covering the latest in Natural Language Processing

Translation quality is essential to successful communication across cultures and languages. With the advent of AI, machine translation (MT) has become increasingly prominent in bridging this gap. However, real-time translation that can adapt to changes in context and terminology remains a challenging task.

Today, we will take a look at a new method for real-time adaptive machine translation which utilises large language models and in-context learning.

We also made a video covering this topic; check it out.

Introduction

Large language models have the potential to revolutionise the way we approach machine translation via "in-context learning". This capability allows the model to pick up input-output text generation patterns from examples placed in the prompt, without the need for additional fine-tuning. By feeding an LLM a list of approved translations and terminology, we can get the model to mimic the domain and style characteristics at inference time.

In the current paper, the authors propose a translation system that leverages two components. The first component is an off-the-shelf GPT-3 model; the authors use the latest 175-billion-parameter model here. The model is used to translate directly between languages in a few-shot manner, using a few provided source-target sentence pairs for the language pair in question. The second key component is the use of translation memories. Instead of providing the same example translations for every input, the authors pick examples that are close to the input. The examples are retrieved using embedding-based similarity, from a dataset of translations in the same domain as the input.
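To make the two components more concrete, here is a minimal Python sketch of the idea: retrieve the translation-memory entries closest to the input, then assemble them into a few-shot prompt. This is an illustration under assumptions, not the authors' implementation. In particular, `embed` is a toy bag-of-words stand-in for a real sentence-embedding model, and the prompt layout, the function names and the tiny translation memory are all hypothetical.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy stand-in for a sentence embedding: a bag-of-words count vector.
    A real system would call a sentence-embedding model here (assumption)."""
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(source, memory, k=2):
    """Return the k (source, target) pairs whose source side is most similar to the input."""
    query = embed(source)
    ranked = sorted(memory, key=lambda pair: cosine(query, embed(pair[0])), reverse=True)
    return ranked[:k]

def build_prompt(source, examples, src_lang="English", tgt_lang="Spanish"):
    """Lay out the retrieved pairs as few-shot examples, ending with the new
    source sentence and an empty target slot for the model to complete."""
    lines = []
    for src, tgt in examples:
        lines.append(f"{src_lang}: {src}")
        lines.append(f"{tgt_lang}: {tgt}")
    lines.append(f"{src_lang}: {source}")
    lines.append(f"{tgt_lang}:")
    return "\n".join(lines)

# Hypothetical in-domain translation memory (made up for illustration).
translation_memory = [
    ("The patient should take one tablet daily.", "El paciente debe tomar un comprimido al día."),
    ("Store the tablets in a dry place.", "Conserve los comprimidos en un lugar seco."),
    ("The invoice is due within thirty days.", "La factura vence en treinta días."),
]

source = "The patient should store the tablets at home."
examples = retrieve(source, translation_memory, k=2)
prompt = build_prompt(source, examples)
print(prompt)
```

The resulting prompt would then be sent to the LLM, which is expected to continue the text after the final target-language label. Changing `k` controls how many retrieved translations are placed in the context.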

Results

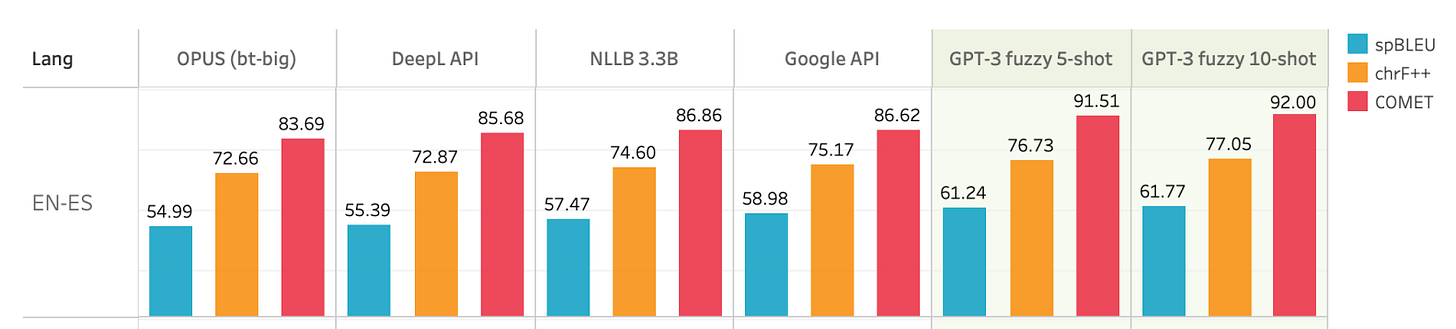

The authors conduct experiments with many high-resource and low-resource translation pairs, comparing the adaptive translation system to strong open-source encoder-decoder translation systems, as well as to popular translation services such as Google Translate and DeepL.

The adaptive translation approach outperformed all the other systems, in particular for high-resource language pairs such as English to Spanish or English to French. For low-resource pairs such as English-to-Kinyarwanda, the approach was less effective. The performance improves when more translations are added to the context: the best performance is achieved with 10 translations.

In the paper, the authors explore several variants of this system, including combining it with an existing encoder-decoder translation model for post-editing, as well as adding terminology terms to the context to help the translation adaptation. Check out the paper to find out more.
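As a rough illustration of the terminology variant, the Python sketch below looks up glossary terms that occur in the source sentence and lists them in the prompt ahead of the sentence to translate. The glossary contents, the "Terms:" layout and the function names are assumptions made for this example; the paper should be consulted for the prompt format the authors actually use.

```python
import re

def matching_terms(source, glossary):
    """Return (term, translation) pairs whose term appears as a whole word in the source."""
    found = []
    for term, translation in glossary.items():
        if re.search(rf"\b{re.escape(term)}\b", source, flags=re.IGNORECASE):
            found.append((term, translation))
    return found

def build_terminology_prompt(source, glossary, src_lang="English", tgt_lang="Spanish"):
    """Prepend the matching approved terms to the sentence to translate (hypothetical layout)."""
    lines = []
    terms = matching_terms(source, glossary)
    if terms:
        lines.append("Terms: " + ", ".join(f"{t} = {tr}" for t, tr in terms))
    lines.append(f"{src_lang}: {source}")
    lines.append(f"{tgt_lang}:")
    return "\n".join(lines)

# Hypothetical approved terminology (made up for illustration).
glossary = {"tablet": "comprimido", "invoice": "factura"}

term_prompt = build_terminology_prompt("Take one tablet daily.", glossary)
print(term_prompt)
```

Only terms that actually occur in the input are added, which keeps the context short. In a full system this block would be combined with the retrieved example translations.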

Conclusion

To sum up, the paper explores the use of large language models for adaptive machine translation. The results are promising, demonstrating a major benefit of in-context learning: it allows for on-the-fly customisation according to customer requirements. It could be that, in the future, all machine translation systems are adaptive, meaning that they can learn from past interactions with us and adjust to our preferred terminology and style.

Thanks for reading! If you are looking for state-of-the-art expertise in Natural Language Processing, you should check out our services at The Global NLP Lab.